Core Surgical Training Curriculum

5 Programme of Assessment

5.1 Purpose of assessment

Assessment of learning is an essential component of any curriculum. This section describes the assessment system and the purpose of its individual components which are blueprinted to the curriculum as shown in appendix 8. The focus is on good practice, based on fair and robust assessment principles and processes in order to ensure a positive educational impact on learners and to support assessors in making valid and reliable judgements. The programme of assessment comprises an integrated framework of examinations, assessments in the workplace and judgements made about a learner during their approved programme of training. Its purpose is to robustly evidence, ensure and clearly communicate the expected levels of performance at critical progression points in, and to demonstrate satisfactory completion of, training as required by the curriculum. The programme of assessment is shown in figure 3 below.

Assessments can be described as helping learning or testing learning - referred to as formative and summative respectively. There is a link between the two; some assessments are purely formative (shown in green in figure 3), others are explicitly summative with a feedback element (shown in blue) while others provide formative feedback while contributing to summative assessment (shown in orange).

The purposes of formative assessment are to:

- assess trainees’ actual performance in the workplace.

- enhance learning by enabling trainees to receive immediate feedback, understand their own performance and identify areas for development.

- drive learning and enhance the training process by making it clear what is required of trainees and motivating them to ensure they receive suitable training and experience.

- enable supervisors to reflect on trainee needs in order to tailor their approach accordingly.

The purposes of summative assessment are to:

- provide robust, summative evidence that trainees are meeting the curriculum requirements during the training programme.

- ensure that trainees possess the essential underlying knowledge required for their specialty, including the GPCs to meet the requirements of GMP.

- inform the ARCP, identifying any requirements for targeted or additional training where necessary and facilitating decisions regarding progression through the training programme.

- identify trainees who should be advised to consider changes of career direction.

- provide information for the quality assurance of the curriculum.

Figure 3 - Assessment framework

5.2 Delivery of the programme of assessment

The programme of assessment comprises several different types of assessment needed to meet the requirements of the curriculum. Together these generate the evidence required for global judgements to be made about satisfactory trainee performance, progression in, and completion of, training. They include the Intercollegiate Committee for Basic Surgical Examinations (ICBSE) assessments leading to the award of the MRCS, and WBAs. The primary assessment in the workplace is the MCR, which, together with other portfolio evidence, contributes to the AES report for the ARCP. Central to the assessment framework is professional judgement. Assessors are responsible and accountable for these judgements, which are supported by structured feedback to trainees. Assessment takes place throughout the training programme to allow trainees to continually gather evidence of learning and to provide formative feedback to the trainee to aid progression.

Reflection and feedback are also integral components of all WBAs. In order for trainees to maximise the benefit of WBA, reflection and feedback should take place as soon as possible after an event. Feedback should be of high quality and should include a verbal dialogue between trainee and assessor reflecting on the learning episode, attention to the trainee’s specific questions, learning needs and achievements, and an action plan for the trainee’s future development. Both trainees and trainers should recognise and respect cultural differences when giving and receiving feedback [11]. The assessment framework is also designed to identify where trainees may be running into difficulties. Where possible, these are resolved through targeted training, practice and assessment with specific trainers and, if necessary, with the involvement of the AES and TPD to provide specific remedial placements, additional time and additional resources.

5.3 Assessment framework components

Each of the components of the assessment framework is described below.

5.3.1 The sequence of assessment

Training and assessment take place within placements of typically six or twelve months’ duration throughout Core Surgical Training (figure 4). Assessments are carried out by relevantly qualified members of the trainee’s multi-professional team whose roles and responsibilities are described in appendix 5. Trainee progress is monitored primarily by the trainee’s AES through learning agreement meetings with the trainee. Throughout the placement trainees must undertake WBAs while ICBSE examinations are undertaken before the end of the programme. The trainee’s CSs must assess the trainee on the five CiPs and nine GPC domains using the MCR. This must be undertaken towards the mid-point of each placement in a formative way and at the end of the placement when the formative assessment will contribute to the AES’s summative assessment at the final review meeting of the learning agreement. The placement culminates with the AES report of the trainee’s progress for the ARCP. The ARCP makes the final decision about whether a trainee can progress to the next level or complete training. It bases its decision on the evidence that has been gathered in the trainee’s learning portfolio during the period between ARCP reviews, particularly the AES report in each training placement.

Figure 4 - the sequence of assessment through a placement.

5.3.2 The learning agreement

The learning agreement is a formal process of goal setting and review meetings that underpins training and is formulated through discussion. The process ensures adequate supervision during training, provides continuity between different placements and supervisors and is one of the main ways of providing feedback to trainees. There are three learning agreement meetings in each placement. Any significant concerns arising from the meetings should be fed back to the TPD at each point in the learning agreement.

Objective-setting meeting

At the start of each placement the AES and trainee must meet to review the trainee’s progress so far, agree learning objectives for the placement ahead and identify the learning opportunities presented by the placement. The learning agreement is constructively aligned towards achievement of the high-level outcomes (the CiPs and GPCs) and, therefore, the CiPs and GPCs are the primary reference point for planning how trainees will be assessed and whether they have attained the learning required. The learning agreement is also tailored to the trainee’s progress, phase of training and learning needs. The final MCR from the previous placement will be reviewed alongside the most recent trainee self-assessment and the action plan for training. Any specific targeted training objectives from the previous ARCP should also be considered and addressed through this meeting and form part of the learning agreement.

Mid-point review meeting

A meeting between the AES and the trainee must take place at the mid-point of a placement (or each three months within a placement that is longer than six months). The learning agreement must be reviewed, along with other portfolio evidence of training such as WBAs, the logbook and the formative mid-point MCR, including the trainee’s self-assessment. This meeting ensures training opportunities appropriate to the trainee’s own needs are being presented in the placement and are adjusted if necessary, in response to the areas for development identified through the MCR. Particular attention must be paid to progress against targeted training objectives and a specific plan for the remaining part of the placement made if these are not yet achieved. There should be a dialogue between the AES and CSs if adequate opportunities have not been presented to the trainee, and the TPD informed if there has been no resolution. Discussion should also take place if the scope and nature of opportunities should change in the remaining portion of the placement in response to areas for development identified through the MCR.
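The timing rule for mid-point reviews can be illustrated with a short sketch. This is an informal illustration only: the function name `review_points` is hypothetical, and the sketch assumes that the end of the placement is excluded because it is covered by the final review meeting.

```python
def review_points(placement_months: int) -> list[float]:
    """Return the months (counted from the start of the placement) at which
    a mid-point style review would fall.

    ASSUMPTION: in placements longer than six months, the review at the very
    end of the placement is excluded, as that is the final review meeting.
    """
    if placement_months <= 0:
        raise ValueError("placement length must be positive")
    if placement_months <= 6:
        # A single review at the mid-point of the placement.
        return [placement_months / 2]
    # Longer placements: one review every three months.
    return [float(month) for month in range(3, placement_months, 3)]


print(review_points(6))
print(review_points(12))
```

Under these assumptions a six-month placement has one review at three months, and a twelve-month placement has reviews at three, six and nine months.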

Final review meeting

Shortly before the end of each placement trainees should meet with their AES to review portfolio evidence including the final MCR. The dialogue between the trainee and AES should cover the overall progress made in the placement and the AES’s view of the placement outcome.

AES report

The AES must write an end of placement report which informs the ARCP. The report includes details of any significant concerns and provides the AES’s view about whether the trainee is on track in the phase of training for completion within the indicative time. If necessary, the AES must also explain any gaps and resolve any differences in supervision levels which came to light through the MCR.

5.3.3 The Multiple Consultant Report

The assessment of the CiPs and GPCs (high-level outcomes of the curriculum) involves a global professional judgement of a range of different skills and behaviours to make decisions about a learner’s suitability to take on particular responsibilities or tasks that are essential to the role of the phase 2 surgical trainee. The MCR assessment must be carried out by the consultant CSs involved with a trainee, with the AES contributing as necessary to some domains (e.g. Quality Improvement, Research and Scholarship). The number of CSs taking part reflects the size of the specialty unit and is expected to be no fewer than two. The exercise reflects what many consultant trainers do regularly as part of a faculty group.

The MCR includes a global rating in order to indicate how the trainee is progressing in each of the five CiPs. This global rating is expressed as a supervision level recommendation described in table 3 below. Supervision levels are behaviourally anchored ordinal scales based on progression to competence and reflect a judgement that has clinical meaning for assessors. Using the scale, CSs must make an overall, holistic judgement of a trainee’s performance on each CiP. The levels set out in table 2 equate to the level required for entry to phase 2 of specialty training. Levels IV and V equate to the level required for certification and the level of practice expected of a day-one consultant in the Health Service (level IV) or beyond (level V). Although levels IV and V are an essential feature of the supervision level scale in the ten surgical specialty curricula, they are omitted from this curriculum to avoid unrealistic expectations of core trainees.

Figures 5 and 6 show how the MCR examines performance from the perspective of the outcome of the curriculum, the day-one phase 2 surgical trainee, in the GPCs and CiPs. The MCR can identify areas for improvement by selecting appropriate CiP or GPC descriptors from drop down lists or, if further detail is required, through free text. The assessment of the GPCs can be performed by CSs, whilst GPC domains 6-9 might be more relevant to assessment by the AES in some placements.

CSs will be able to best recommend supervision levels because they observe the performance of the trainee in person on a day-to-day basis. The CS group, led by a Lead CS, should meet at the mid-point and towards the end of a placement to conduct a formative MCR. Through the MCR, they agree which supervision level best describes the performance of a trainee at that time in each of the five CiPs and also identify any areas of the nine GPC domains that require development. It is possible for those who cannot attend the group meeting, or who disagree with the report of the group as a whole, to add their own section (anonymously) to the MCR for consideration by the AES. The AES will provide an overview at the end of the process, adding comments and signing off the MCR.

The MCR uses the principle of highlight reporting, where CSs do not need to comment on every descriptor within each CiP but use them to highlight areas that are above or below the expected level of performance. The MCR can describe areas where the trainee might need to focus development or areas of particular excellence. Feedback must be given for any CiP that is not rated at the level set out in table 2 and in any GPC domain where development is required. Feedback must be given to the trainee in person after each MCR and, therefore, includes a specific feedback meeting with the trainee using the highlighted descriptors within the MCR and/or free text comments.

The mid-point MCR feeds into the mid-point learning agreement meeting. At the mid-point it allows goals to be agreed for the second half of the placement, with an opportunity to specifically address areas where development is required. Towards the end of the placement the MCR feeds into the final review learning agreement meeting, helping to inform the AES report (figure 4). It also feeds into the objective-setting meeting of the next placement to facilitate discussion between the trainee and the next AES.

The MCR, therefore, gives valuable insight into how well the trainee is performing, highlighting areas of excellence, areas of support required and concerns. It forms an important part of detailed, structured feedback to the trainee at the mid-point, and before the end of the placement, and can trigger any appropriate modifications for the focus of training as required. The final formative MCR, together with other portfolio evidence, feeds into the AES report which in turn feeds into the ARCP. The ARCP uses all presented evidence to make the definitive decision on progression.

Table 3 - MCR anchor statements and guide to recommendation of appropriate supervision level in each CiP.

| MCR rating scale (CiPs) | Anchor statement | Trainer input: does the trainee perform part or all* of the task? | Trainer input: is guidance required? | Trainer input: is it necessary for a trainer to be present for the task? |
|---|---|---|---|---|
| Supervision Level Ia | Able to observe passively only | no | n/a | throughout |
| Supervision Level Ib | Able to observe actively: may engage in the activity to provide assistance or analyse and discuss what is observed | no | throughout | throughout |
| Supervision Level IIa | Able and trusted to act with direct supervision: some of the activity is performed by the trainee | yes, elements only | all aspects | throughout |
| Supervision Level IIb | Able and trusted to act with direct supervision: the trainee is able to string elements together into fluent parts of the task | yes, fluent parts; most of the task | all aspects | present for most of the task and available to be present as soon as required throughout |
| Supervision Level IIc | Able and trusted to act with direct supervision: the trainee is able to complete the task | yes, all of the task | all aspects | present for part of the task and available to be present throughout |
| Supervision Level III | Able and trusted to act with indirect supervision: the supervisor will want to provide guidance for, and oversight of most aspects of the activity. Guidance may be remote or provided in advance of the activity | yes, all of the task | at least some aspects | available to attend in the event of particular challenge |

* It is not anticipated that any element of the tasks will be acquired to independence during Core Surgical Training, and some elements, particularly around executive decision-making and office-based administration, may not be engaged with at all during these early years.

In making a supervision level recommendation, CSs should take into account their experience of working with the trainee and the degree of autonomy they were prepared to give the trainee during the placement. They should also take into account all the descriptors of the activities, knowledge and skills listed in the detailed descriptions of the CiPs. The CSs should indicate which of the descriptors of the activities, knowledge and skills require further development (to a limit of five items per CiP, so as to allow targets set at feedback to be timely, relevant and achievable). If a trainee excels in one or more areas, the relevant descriptors should be indicated. Examples of how the online MCR will look are shown in Figures 5 and 6. Figure 7 describes the MCR as an iterative process involving the trainee, CSs, the AES and the development of specific, relevant, timely and achievable action plans.

Multiple Consultant Report – assessment of the GPCs

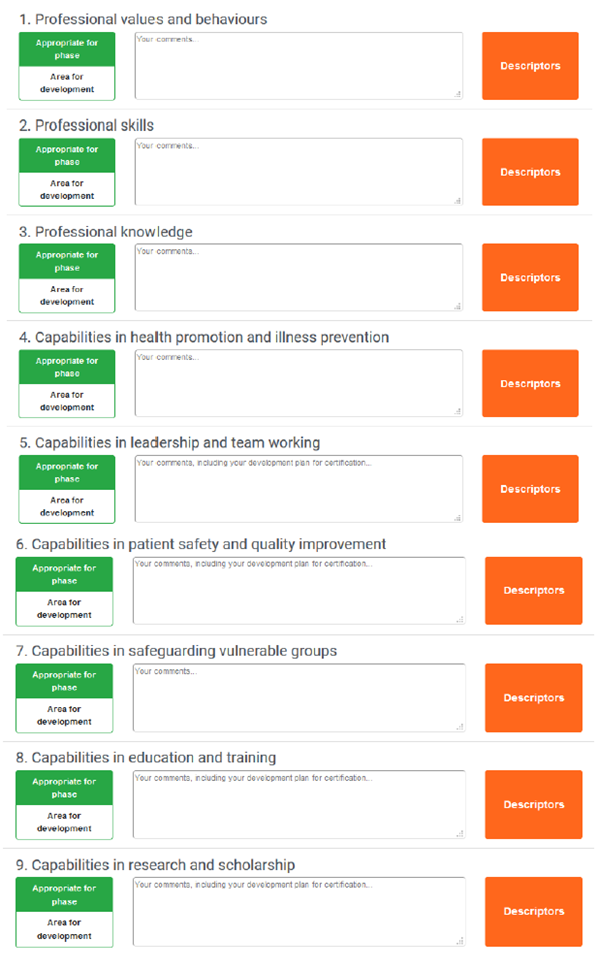

Figure 5 - An example of how the GPCs are assessed through the MCR. CSs would consider whether there are areas for development in any of the nine GPC domains. If not, then nothing further need be recorded. If there are areas for development identified, then CSs are obliged to provide feedback through the MCR. This feedback can be recorded as free text in the comments box indicated. The Descriptors box expands to reveal descriptors taken from the GPC framework. These can be used as prompts for free text feedback or verbatim as standardised language used to describe professional capabilities.

Multiple Consultant Report – assessment of the CiPs

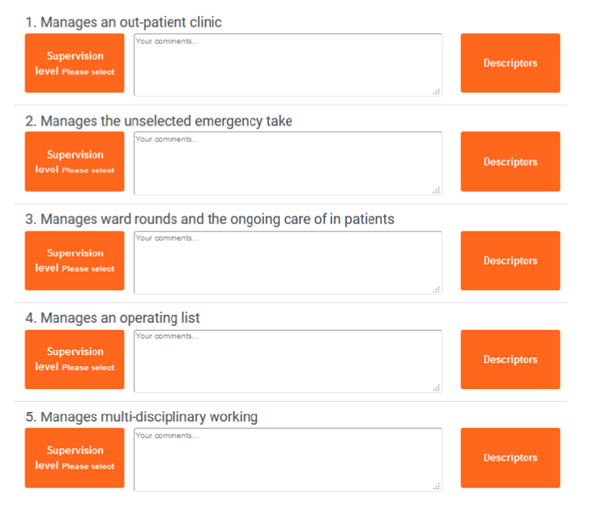

Figure 6 - An example of how the CiPs are assessed through the MCR. CSs would decide what supervision level to recommend for each of the CiPs and record this for each through the Supervision Level box. Trainers are obliged to provide feedback through the MCR in the comments box provided. The Descriptors box expands to reveal CiP descriptors. These can be used as prompts for free text feedback or verbatim as standardised language to describe the clinical capabilities.

5.3.4 Trainee Self-assessment

Trainees should complete the self-assessment in the same way as CSs complete the MCR, using the same form and describing self-identified areas for development with free text or using CiP or GPC descriptors. Reflection for insight on performance is an important development tool and self-recognition of the level of supervision needed at any point in training enhances patient safety. Self-assessments are part of the evidence reviewed when meeting the AES at the mid-point and end of a placement. A wide discrepancy between the self-assessment and the recommendation by CSs in the MCR allows over- or under-confidence to be identified and support to be given accordingly.
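The comparison between a trainee’s self-assessed supervision level and the level recommended by CSs can be illustrated with a short sketch. It treats the supervision levels as an ordinal scale, as the curriculum describes. The function names and the threshold of two scale steps for a "wide" discrepancy are assumptions made for illustration. The curriculum does not define a numerical threshold.

```python
# Supervision levels used in this curriculum, in ascending order.
LEVELS = ["Ia", "Ib", "IIa", "IIb", "IIc", "III"]


def level_gap(self_level: str, cs_level: str) -> int:
    """Number of scale steps by which the self-assessment exceeds
    (positive) or falls below (negative) the CS recommendation."""
    return LEVELS.index(self_level) - LEVELS.index(cs_level)


def describe_discrepancy(self_level: str, cs_level: str, threshold: int = 2) -> str:
    """Classify the gap. ASSUMPTION: `threshold` (two steps) is a
    hypothetical cut-off chosen only for this example."""
    gap = level_gap(self_level, cs_level)
    if gap >= threshold:
        return "possible over-confidence"
    if gap <= -threshold:
        return "possible under-confidence"
    return "broadly aligned"


print(describe_discrepancy("III", "IIa"))
print(describe_discrepancy("IIb", "IIc"))
```

In practice the judgement about whether a discrepancy is significant rests with the AES and CSs; the sketch only shows how an ordinal comparison might be framed.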

Figure 7 - The iterative process of the MCR, showing the involvement of CSs, self-assessment by trainees, face to face meeting between trainees and supervisors and the development of an action plan focused on identified learning needs over the next three to six months of training. Progress against these action plans is reviewed by AES and at the subsequent MCRs.

5.3.5 Workplace-based assessment (WBA)

Each individual WBA is designed to assess a range of important aspects of performance in different training situations. Taken together the WBAs can assess the breadth of knowledge, skills and performance described in the curriculum. They also constructively align with the clinical CiPs and GPCs as shown in appendix 8 and will be used to underpin assessment in those areas of the syllabus central to core surgical training, i.e. the critical skills. They are also available for other conditions and operations as determined by the trainee and supervisors, especially where needed in the assessment of a remediation package to evidence progress in areas of training targeted by a non-standard ARCP outcome. The WBAs described in this curriculum have been in use for over ten years and are now an established component of training.

The WBA methodology is designed to meet the following criteria:

- Validity – the assessment actually does test what is intended; that methods are relevant to actual clinical practice; that performance in increasingly complex tasks is reflected in the assessment outcome

- Reliability - multiple measures of performance using different assessors in different training situations produce a consistent picture of performance over time

- Feasibility – methods are designed to be practical by fitting into the training and working environment

- Cost-effectiveness – the only significant additional costs should be in the training of trainers and the time investment needed for feedback and regular appraisal; this should be factored into trainer job plans

- Opportunities for feedback – structured feedback is a fundamental component

- Impact on learning – the educational feedback from trainers should lead to trainees’ reflections on practice in order to address learning needs.

WBAs use different trainers’ direct observations of trainees to assess the actual performance of trainees as they manage different clinical situations in different clinical settings and provide more granular formative assessment in the crucial areas of the curriculum than does the more global assessment of CiPs in the MCR. WBAs are primarily aimed at providing constructive feedback to trainees in important areas of the syllabus throughout each placement in all phases of training. Trainees undertake each task according to their training phase and ability level and the assessor must intervene if patient safety is at risk. It would be normal for trainees to have some assessments which identify areas for development because their performance is not yet at the standard for the completion of that training.

Each WBA is recorded on a structured form to help assessors distinguish between levels of performance and prompt areas for their verbal developmental feedback to trainees immediately after the observation. Each WBA includes the trainee’s and assessor’s individual written comments, ratings of individual competencies (e.g. Satisfactory, Needs development or Outstanding) and global rating (using anchor statements mapped to phases of training). Rating scales support the drive towards excellence in practice, enabling learners to be recognised for achievements above the level expected for a level or phase of training. They may also be used to target areas of under-performance. As they accumulate, the WBAs for the critical skills also contribute to the AES report for the ARCP.

WBAs are formative and may be used to assess and provide feedback on all clinical activity. Trainees can use any of the assessments described below to gather feedback or provide evidence of their progression in a particular area. WBAs are only mandatory for the assessment of the critical skills (see appendix 3). They may also be useful to evidence progress in targeted training where this is required e.g. for any areas of concern.

WBAs for the critical skills will inform the AES report along with a range of other evidence to aid the decision about the trainee’s progress. All trainees are required to use WBAs to evidence that they have achieved the learning in the index procedures or critical conditions by certification. However, it is recognised that trainees will develop at different rates, and failure to attain a specific level at a given point will not necessarily prevent progression if other evidence shows satisfactory progress.

The assessment blueprint (appendix 8) indicates how the assessment programme provides coverage of the CiPs, the GPC framework and the syllabus. It is not expected that the assessment methods will be used to evidence each competency and additional evidence may be used to help make a supervision level recommendation. The principle of assessment is holistic; individual GPC and CiP descriptors and syllabus items should not be assessed, other than in the critical skills or if an area of concern is identified. The programme of assessment provides a variety of tools to give feedback to, and assess, the trainee.

Case Based Discussion (CBD)

The CBD assesses the performance of a trainee in their management of a patient case to provide an indication of competence in areas such as clinical judgement, decision-making and application of medical knowledge in relation to patient care. The CBD process is a structured, in-depth discussion between the trainee and a consultant supervisor. The method is particularly designed to test higher order thinking and synthesis as it allows the assessor to explore deeper understanding of how trainees compile, prioritise and apply knowledge. By using clinical cases that offer a challenge to trainees, rather than routine cases, trainees are able to explain the complexities involved and the reasoning behind choices they made. It also enables the discussion of the ethical and legal framework of practice. It uses patient records as the basis for dialogue, for systematic assessment and structured feedback. As the actual record is the focus for the discussion, the assessor can also evaluate the quality of record keeping and the presentation of cases. Trainees are assessed against the standard for the completion of core surgical training.

Clinical Evaluation Exercise (CEX) / CEX for Consent (CEX(C))

The CEX or CEX(C) assesses a clinical encounter with a patient to provide an indication of competence in skills essential for good clinical care such as communication, history taking, examination and clinical reasoning. These can be used at any time and in any setting when there is a trainee and patient interaction and an assessor is available. The CEX is used in this curriculum to assess the clinical critical skills. Trainees are assessed against the standard for the completion of core surgical training as detailed in appendix 3.

Direct Observation of Procedural Skills (DOPS)

The DOPS assesses the trainee’s technical, operative and professional skills in a range of basic diagnostic and interventional procedures during routine surgical practice in wards, out-patient clinics and operating theatres. The DOPS is used in this curriculum to assess the technical critical skills. Trainees are assessed against the standard for the completion of core surgical training as detailed in appendix 3.

Multi-source Feedback (MSF)

The MSF assesses professional competence within a team working environment. It comprises a self-assessment and the assessments of the trainee’s performance from a range of colleagues covering different grades and environments (e.g. ward, theatre, out-patients) including the AES. The competencies map to the standards of GMP and enable serious concerns, such as those about a trainee’s probity and health, to be highlighted in confidence to the AES, enabling appropriate action to be taken. Feedback is in the form of a peer assessment chart, enabling comparison of the self-assessment with the collated views received from the team and includes their anonymised but verbatim written comments. The AES should meet with the trainee to discuss the feedback on performance in the MSF. Trainees are assessed against the standard for the completion of their training level.

Procedure Based Assessment (PBA)

The PBA assesses advanced technical, operative and professional skills in a range of specialty procedures or parts of procedures during routine surgical practice in which trainees are usually scrubbed in theatre. The assessment covers pre-operative planning and preparation; exposure and closure; intra-operative elements specific to each procedure and post-operative management. The standard is at the level of certification, well beyond the end-point of this core surgical training curriculum.

Surgical logbook

The logbook is tailored to each specialty and allows the trainee’s competence as assessed by DOPS and PBA to be placed in context. It is not a formal assessment in its own right, but trainees are required to keep a log of all operative procedures they have undertaken including the level of supervision required on each occasion using the key below. The logbook demonstrates breadth of experience which can be compared with procedural competence using the DOPS and the PBA and will be compared to the technical critical skills and indicative numbers as detailed in appendix 3.

Observed (O)

Assisted (A)

Supervised - trainer scrubbed (S-TS)

Supervised - trainer unscrubbed (S-TU)

Performed (P)

Training more junior trainee (T)
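As an illustration only, the logbook key above could be represented and tallied as in the following sketch. The class and function names, the entry format and the procedure names in the example are hypothetical. They do not describe how the ISCP logbook is implemented.

```python
from collections import Counter
from enum import Enum


class Supervision(Enum):
    """Logbook supervision codes, taken from the key in the curriculum."""
    O = "Observed"
    A = "Assisted"
    S_TS = "Supervised - trainer scrubbed"
    S_TU = "Supervised - trainer unscrubbed"
    P = "Performed"
    T = "Training more junior trainee"


def tally(entries: list[tuple[str, Supervision]]) -> dict[str, Counter]:
    """Count logbook entries per procedure and supervision code.

    `entries` is a hypothetical list of (procedure name, code) pairs.
    """
    counts: dict[str, Counter] = {}
    for procedure, code in entries:
        counts.setdefault(procedure, Counter())[code] += 1
    return counts


example = [
    ("appendicectomy", Supervision.A),
    ("appendicectomy", Supervision.S_TS),
    ("appendicectomy", Supervision.S_TS),
    ("hernia repair", Supervision.O),
]
for procedure, codes in tally(example).items():
    print(procedure, {code.name: n for code, n in codes.items()})
```

A tally of this kind shows breadth of experience only; it is not an assessment of competence, which is evidenced through the DOPS and PBA.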

The following WBAs may also be used to further collect evidence of achievement, particularly in the GPC domains of Quality improvement, Education and training and Leadership and team working:

Assessment of audit (AoA)

The AoA reviews a trainee’s competence in completing an audit or quality improvement project. It can be based on documentation or a presentation of a project. Trainees are assessed against the standard for the completion of their phase of training.

Observation of teaching (OoT)

The OoT assesses the trainee’s ability to provide formal teaching. It can be based on any instance of formalised teaching by the trainee which has been observed by the assessor. Trainees are assessed against the standard for the completion of their phase of training.

The forms and guidance for each WBA method can be found on the ISCP website (see section 7).

5.3.6 The Intercollegiate Membership examination of the Royal Colleges of Surgeons (MRCS)

The MRCS examinations are required assessment components of core surgical training and evidence of completion of these is required before the award of an ARCP outcome 6. The MRCS, MRCS(ENT) and the Diploma in Otolaryngology – Head and Neck Surgery (DO-HNS) are governed by the ICBSE [12], which develops, maintains and quality assures them on behalf of the four surgical royal colleges. Possession of DO-HNS without the MRCS is not an adequate qualification to satisfy the requirements of this curriculum. These examinations are a powerful driver for knowledge and clinical skill acquisition. The examination components have been chosen to test the application of knowledge, clinical skills, interpretation of findings, clinical judgement, decision-making, professionalism, and communication skills described within the curriculum. The examination also assesses components of the CiPs and GPCs (as shown in appendix 8).

There are two parts to the MRCS:

- Part A is a multiple-choice question (MCQ) examination consisting of two papers taken on the same day. Both are single best answer (SBA) papers designed to test the application of knowledge and clinical reasoning in basic sciences and principles of surgery in general.

- Part B is a 17-station objective structured clinical examination (OSCE). The 17 stations cover anatomy, pathology and surgical science and critical care (the ‘knowledge’ content area) and communication skills, physical examination and procedural skills (the ‘skills’ content area).

Standard setting:

- Part A is standard set by the modified Angoff method, with an Angoff standard setting meeting held every three years. Standards are maintained at examination sittings between these meetings by using ‘marker’ questions which inform the standard setting group how well a cohort of candidates is performing compared to previous cohorts. Any questions identified as problematic by statistical analysis are discussed at the standard setting meeting after each exam and, if necessary, removed.

- Part B is standard set as follows: a score of 0-20 is provided on the candidate’s performance in addition to a pass/borderline/fail global judgement for each station. The examination is separated into two components, covering the ‘knowledge’ and ‘skills’ scenarios. The standard setting process involves calculating the total mark for each individual scenario over all UK diets going back to February 2013. If the total number of occasions that the scenario was used was greater than 500, only the most recent 500 results are considered in the calculation. Only scenarios that had not been significantly altered during this period are included in the calculation. The pass mark for each circuit is, therefore, generated by compiling the pass marks of the individual scenarios in each circuit. Pass marks are, therefore, generated for both ‘knowledge’ and ‘skills’ scenarios and the standard error of measurement (SEM) is added. The pass mark is calculated in advance of the meeting and the committee are required to consider the effect of external factors highlighted in the feedback (examiner/candidate behaviour or scenario performance) that may have influenced the exam when agreeing the final pass mark for each circuit.
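The arithmetic described for Part B can be sketched as follows. This is a minimal illustration under stated assumptions, not the ICBSE procedure. The curriculum does not say how a pass mark is derived for an individual scenario, so the sketch assumes a borderline-group approach (the mean score of candidates given a ‘borderline’ global judgement). It also assumes that "compiling" scenario pass marks means summing them. All function names and the sample data are hypothetical.

```python
from statistics import mean

WINDOW = 500  # from the curriculum: only the most recent 500 results are used


def most_recent(results: list, window: int = WINDOW) -> list:
    """Keep the most recent `window` results (list ordered oldest first)."""
    return results[-window:]


def scenario_pass_mark(results: list[tuple[float, str]]) -> float:
    """Pass mark for one scenario from (score, global judgement) pairs.

    ASSUMPTION: borderline-group method, i.e. the mean score of candidates
    judged 'borderline'. The curriculum does not specify the method.
    """
    borderline = [score for score, judgement in most_recent(results)
                  if judgement == "borderline"]
    if not borderline:
        raise ValueError("no borderline results available for this scenario")
    return mean(borderline)


def circuit_pass_mark(scenarios: list[list[tuple[float, str]]], sem: float) -> float:
    """ASSUMPTION: scenario pass marks are summed for the circuit, and the
    standard error of measurement (SEM) is then added, as the text states."""
    return sum(scenario_pass_mark(results) for results in scenarios) + sem


scenario_a = [(10, "fail"), (12, "borderline"), (14, "borderline"), (18, "pass")]
scenario_b = [(11, "borderline"), (16, "pass")]
print(circuit_pass_mark([scenario_a, scenario_b], sem=1.5))
```

The final pass mark is agreed by the committee after considering external factors, so any calculated figure of this kind is a starting point rather than the outcome.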

Candidate feedback:

- Part A provides the maximum possible score, the score required to pass and the candidate’s own score. Candidates receive:

- the maximum possible score

- their own score

- the average of all candidates’ scores for sub-sections within the exam (anatomy, physiology and pharmacology, pathology and microbiology, common surgical conditions, perioperative management, trauma)

- Part B provides the candidate’s overall result, the candidate’s mark for knowledge, the mark required to pass knowledge, the candidate’s mark for skills and the mark required to pass skills. Candidates receive:

- for the four sub-sections of the exam (anatomy and surgical pathology, applied surgical science and critical care, communication skills, and clinical and procedural skills): the maximum mark available, their own mark and the mean mark for all candidates

- for the domains of the exam (clinical knowledge and its application, clinical and technical skill, communication, and professionalism): the maximum mark available, their own mark and the mean mark for all candidates

Attempts

Trainees have a maximum of six attempts at the first section and four attempts at the second section of the examination with no re-entry. A pass in section 1 is required to proceed to section 2 and must be achieved within two years of the first attempt. The GMC sets a time limit for completion of the entire examination process of seven years. Pro-rata adjustments are permissible to these timescales for LTFT trainees.

5.3.7 Trauma certification

Satisfactory and contemporary completion of a standard trauma course with current provider status at the point of final ARCP is required for satisfactory completion of the Core Surgical Training curriculum. A valid certificate may be achieved through the Advanced Trauma Life Support (ATLS®), Advanced Paediatric Life Support (APLS®), European Trauma Course, Battlefield Advanced Trauma Life Support (BATLS) or equivalent.

5.3.9 Annual Review of Competence Progression (ARCP)

The ARCP is a formal Deanery/HEE Local Office process overseen and led by the TPD. It scrutinises the trainee’s suitability to progress through the training programme. It bases its decisions on the evidence that has been gathered in the trainee’s learning portfolio during the period between ARCP reviews, particularly the AES report in each training placement. The ARCP would normally be undertaken on an annual basis for all trainees in surgical training. A panel may be convened more frequently for an interim review or to deal with progression issues (either accelerated or delayed) outside the normal schedule. The ARCP panel makes the final summative judgement on the trainee’s supervision level for each learning outcome and determines whether trainees are making appropriate progress through the phase of training within the indicative time for that phase.

5.4 Completion of Core Surgical Training

The following requirements are applied to all trainees completing this Core Surgical Training curriculum.

Trainees must:

a) be fully registered with the GMC and have a licence to practise (UK trainees)

b) have successfully completed the MRCS or MRCS(ENT) examination

c) have achieved the required supervision levels listed in section 3.4, table 2 in all the CiPs

d) have demonstrated the GPCs as appropriate to the phase of training

e) have achieved the required level of competence in the critical skills as evidenced through the appropriate WBAs (section 3.5.2 and appendix 3)

f) be in possession of in date certification through an approved trauma course (section 5.3.7)

g) have been awarded an outcome 6 at a final ARCP (or an outcome 1 in run-through programmes).

A final ARCP panel should be guided by the decision matrix below (table 4) in considering the award of an outcome 6 (1 in run-through programmes):

Table 4 – an ARCP panel guide for Core Surgical Training

| Syllabus area | Required evidence | Suggested evidence |
| --- | --- | --- |
| Common content module | Certificate of completion of MRCS or MRCS(ENT)<br>Mandatory WBAs (appendix 3)<br>Current approved trauma provider status (section 5.3.7)<br>Completed AES report and at least one CS report from each placement<br>Up to date logbook<br>MSF from each whole time equivalent (WTE) training year<br>MCR from each placement | Logbook evidence of >120 cases per year<br>WBA portfolio¹ covering particular areas of interest as agreed with AES, or to evidence progress in targeted training areas as required by a previous ARCP panel |
| Core specialty modules | Completion of at least one module² | Logbook, WBA portfolio¹ and CS report covering specified syllabus areas (see syllabus for details) |
| ST3 preparation modules | Completion of one module²<br>Final MCR showing capability at required supervision levels or better (section 3.4, table 2) | Logbook, WBA portfolio¹ and CS report covering specified syllabus areas (see syllabus for details) |
| Annual appraisal | Completed enhanced Form R or equivalent | Engagement with training programme³ |
| Teaching and training | | Evidence of teaching delivery within AES report, other evidence or as OOTs |
| Keeping up to date and understanding how to analyse information | | Evidence of engagement with audit, medical literature and guidelines within AES report, other evidence or as AoAs |
| Leadership | | Evidence of engagement with local clinical governance and faculty groups within AES report or other evidence |

¹ Aside from the mandatory WBAs, no minimum number of WBAs is specified by this Core Surgical Training curriculum. Trainees may agree to complete WBAs in areas of interest with their AES, or be required to complete a series of WBAs in targeted areas of training by an ARCP panel.

² It is to be hoped that the AES final report will provide comment on whether this is the case.

³ Details of the requirements for annual appraisal and revalidation for doctors in training can be found at https://www.copmed.org.uk/publications/revalidation and https://www.gmc-uk.org/registration-and-licensing/managing-your-registration/revalidation/revalidation-requirements-for-doctors-in-training

11 Cultural awareness course

12 intercollegiate MRCS exams